Data is at the heart of supply chain management. As a basic element of information flow, it is intrinsic to how we define supply chains: global networks that supply goods and services through the organised flow of information, physical goods and money.

From ERP packages to real-time IoT flows that enable optimisation and detailed monitoring, data is omnipresent in supply chain processes. However, this abundance of information does not automatically translate into optimised end-to-end flows. Because data is often locked in specialised software, siloed between departments or simply hard for business teams to access, data-driven supply chains are struggling to deliver on their promises.

The supply chain: added-value creator

The crises faced by global supply chains in recent years have revealed at least one common denominator across all companies: the need to reconfigure the links in the chain to adapt to changing demand and changes in resource availability.

To meet this challenge, one approach is particularly effective: the agile development and deployment of targeted, high-value use cases to support operations. Whatever their format, these solutions help to bridge the larger functional gaps in existing software: performance indicators, customised business planning tools, AI models, low-code applications, automated workflows, etc.

In this type of architecture, existing software, such as ERP, APS, WMS and TMS, still forms the basis of the supply chain management process. There is no doubt about the relevance of this software in managing both reference data and transactional data, as its functionality has been proven for decades.

To ensure agility today, these monolithic solutions need to be supplemented with targeted tools. The benefits of these tools may include the following:

- More effective supply chain processes (for example, using a tailored forecasting algorithm to improve forecast reliability)

- Competitive advantage (for example, a new inventory management service from the supplier made possible by forecasting shortages at SKU level)

- Rapid development and deployment (within weeks or months)

- Continuous updates to meet the latest requirements of the supply chain

Data science applied to the supply chain

While this approach is relevant to all operational processes (purchasing, finance, HR, production, etc.), supply chain functions provide ideal ground for high-value use cases. At Argon & Co, we think that data science technologies and tools are a key element of this approach: data visualisation tools, data platforms, advanced analyses, AI algorithms, machine learning, etc.

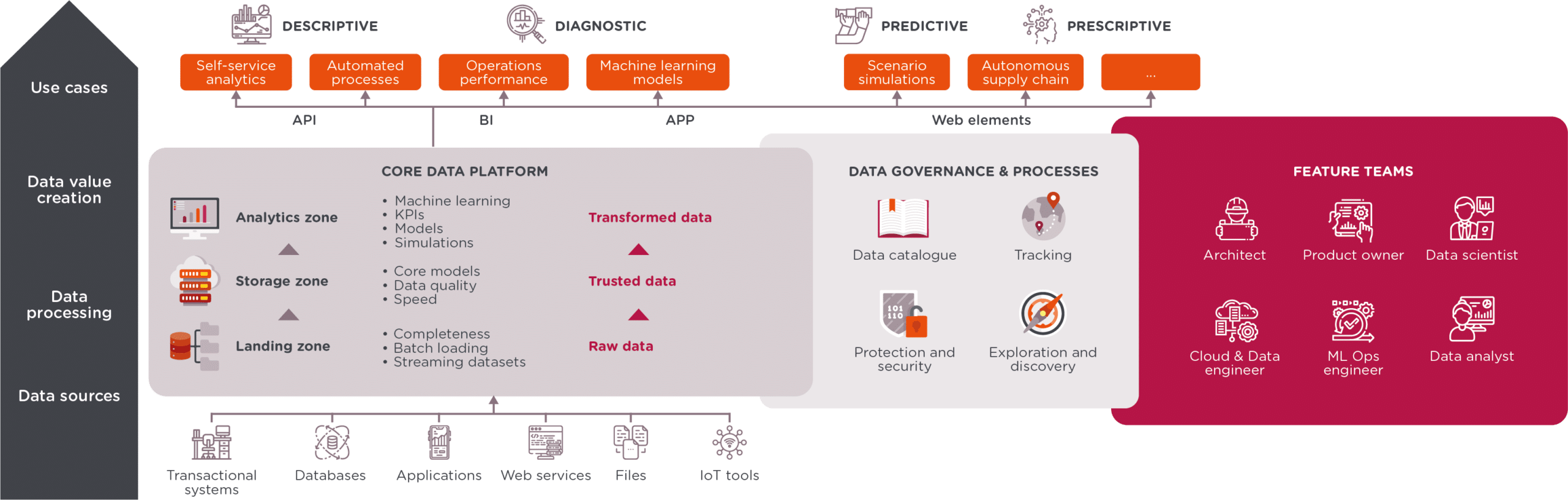

From a conceptual point of view, data-driven solutions for the supply chain fall into four distinct categories, in ascending order of maturity and complexity:

- Descriptive: e.g. a stock status report across all platforms and stores at a glance

- Diagnostic: e.g. the link between the delayed supply of a given raw material and the customer orders which will be affected further down the supply chain

- Predictive: e.g. probabilistic forecasting of short-term demand

- Prescriptive: e.g. a recommendation to place an additional order with a secondary supplier due to (i) a likely increase in demand, (ii) a predicted delay with the primary supplier and (iii) a low overall stock level for the corresponding finished products
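The prescriptive example above can be sketched as a simple rule that combines signals from the lower-level use cases. This is a minimal illustration only: the `SkuSignals` fields, the function name and all threshold values are assumptions made for the example, not figures from an actual deployment.

```python
from dataclasses import dataclass


@dataclass
class SkuSignals:
    """Hypothetical inputs for one finished product, fed by lower-level use cases."""
    forecast_demand: float      # predictive: expected demand over the planning horizon
    baseline_demand: float      # descriptive: recent average demand over the same horizon
    primary_delay_days: float   # predictive: expected delay from the primary supplier
    stock_on_hand: float        # descriptive: current overall stock level


def recommend_secondary_order(
    signals: SkuSignals,
    demand_uplift: float = 1.2,    # assumed threshold: forecast at least 20% above baseline
    max_delay_days: float = 5.0,   # assumed tolerance for primary supplier delay
    min_cover_ratio: float = 1.0,  # assumed: stock should cover the forecast demand
) -> bool:
    """Return True when all three conditions from the prescriptive example hold."""
    demand_rising = signals.forecast_demand >= demand_uplift * signals.baseline_demand
    supplier_late = signals.primary_delay_days > max_delay_days
    stock_low = signals.stock_on_hand < min_cover_ratio * signals.forecast_demand
    return demand_rising and supplier_late and stock_low
```

In practice the thresholds would be tuned per product family, and the recommendation would typically be reviewed by a planner rather than executed automatically.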

In the examples mentioned above, you can see that the more mature use cases utilise intelligence from the lower-level applications. However, even the less advanced use cases often present a direct return on investment, before serving as a springboard to more advanced applications.

More specifically, the previous diagram illustrates a series of supply chain use cases built on data science technologies, all of which we have encountered and deployed in our customers’ organisations. This list is neither exhaustive, nor specific to a particular sector.

The importance of data platforms

Data platform: a springboard for use cases.

This use case-based approach relies on data: its existence, availability, quality and accessibility. We usually notice a large gap between regular data management and the requirements of this approach. The company-level data platform is therefore introduced as a set of concepts and tools aimed at bridging this gap, by enabling and sustaining the company’s use cases. We believe that this kind of platform must combine technological components, specialist teams and adaptive data governance, acting as a “single source of truth” for all the company’s tools and use cases.

Expectations addressed by the data platforms.

The data and supply chain teams share the same aspirations for the enterprise data platform:

- To create a single source of truth for each business area, by using well-designed data. For example, the “sales forecasting” item must be developed with the expertise of forecasters before being made available to other participants in the supply chain, including purchasing, marketing, merchandising and product development managers

- To connect numerous data sources, extracting data from systems of record and making it available for numerous uses. For example, providing end-to-end visibility across a global supply chain by taking in data from all national ERP systems

- To create a semantic layer between existing IT and analytical tools, and therefore to separate software and IT projects from data use cases. For example, an APS tool can be deployed without having to completely redefine the key performance indicators of supply and demand planning
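As a rough sketch of the last two expectations, the snippet below maps extracts from two national ERP systems onto one common schema, then computes an indicator against that schema only. The field names, country codes and sample records are hypothetical and exist purely for illustration.

```python
from typing import Callable, Dict, List

Record = Dict[str, object]

# Hypothetical raw extracts: each national ERP uses its own field names.
ERP_FR: List[Record] = [{"article": "SKU-1", "qte_stock": 120}]
ERP_DE: List[Record] = [
    {"material": "SKU-1", "bestand": 80},
    {"material": "SKU-2", "bestand": 40},
]

# Semantic layer: one mapping per source system onto the common schema.
MAPPERS: Dict[str, Callable[[Record], Record]] = {
    "FR": lambda r: {"sku": r["article"], "stock_units": r["qte_stock"]},
    "DE": lambda r: {"sku": r["material"], "stock_units": r["bestand"]},
}


def unified_stock(sources: Dict[str, List[Record]]) -> List[Record]:
    """Translate every source record into the common schema, tagged by country."""
    rows: List[Record] = []
    for country, records in sources.items():
        to_common = MAPPERS[country]
        for record in records:
            rows.append({"country": country, **to_common(record)})
    return rows


def global_stock_by_sku(rows: List[Record]) -> Dict[str, int]:
    """Indicator defined on the common schema only, independent of any one ERP."""
    totals: Dict[str, int] = {}
    for row in rows:
        totals[row["sku"]] = totals.get(row["sku"], 0) + row["stock_units"]
    return totals
```

With this separation, replacing one source system would only require a new entry in `MAPPERS`; the indicator itself would stay unchanged.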

What are the consequences for the company and the teams?

While the return on investment that the target use cases can offer is no longer in doubt, the enterprise data platform also offers a more fundamental advantage: it serves as the basis of a new, more data-driven enterprise culture.

In this field, the supply chain teams probably have a head start thanks to their data analysis skills: Excel has been the supply chain analyst’s main weapon for decades. Better still, supply chain teams are known for being among the first to implement data warehouses and data lakes, and for relying heavily on business intelligence tools.

We can go further still by providing these teams with highly accessible, cross-functional data. Combined with modern and powerful tools, this facilitates flexible data exploration, analysis and modelling. The concept of “self-service analysis” for business users is thus made possible by tools such as:

- Data visualisation software (Power BI, Tableau, QlikView, Looker, etc.)

- Collaborative SQL editors coupled with notebooks and/or spreadsheet software (Databricks, Azure Data Studio, PopSQL, etc.)

- All-in-one platforms that can also support the deployment of AI models (Dataiku, Alteryx, DataRobot, etc.)

To achieve this vision, supply chain teams must collaborate closely with their corresponding feature team (a subset of the data team that specialises in the supply chain domain).

On the one hand, the data teams can “push” the more complex use cases based on their specialist profiles (data engineers, data scientists, user interface experts, etc.). For example, this could be a machine-learning-based algorithm to predict the sales potential of a new product, or a model to predict delivery times from suppliers and sub-contractors.

On the other hand, the supply chain teams can create their own use cases from the database models provided by the data teams. For example, this could be a stock policy simulation model and the stock analysis dashboard that accompanies it.
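To give an idea of what such a stock policy simulation might look like, here is a minimal sketch of a reorder-point policy with a fixed order quantity and lead time. The policy, the lost-sales assumption and every parameter name are illustrative choices for this example, not a description of a specific model.

```python
from typing import List, Tuple


def simulate_reorder_point(
    daily_demand: List[int],
    initial_stock: int,
    reorder_point: int,
    order_qty: int,
    lead_time_days: int,
) -> Tuple[float, List[int]]:
    """Simulate a reorder-point policy; return (fill rate, end-of-day stock history).

    Assumptions: unmet demand is lost, stock is reviewed at the end of each day,
    and an order of `order_qty` is placed when on-hand plus on-order stock is at
    or below `reorder_point`.
    """
    on_hand = initial_stock
    pipeline: List[Tuple[int, int]] = []  # (arrival_day, quantity) of open orders
    served = 0
    total = 0
    history: List[int] = []

    for day, demand in enumerate(daily_demand):
        # Receive any orders arriving today.
        on_hand += sum(qty for arrival, qty in pipeline if arrival == day)
        pipeline = [(arrival, qty) for arrival, qty in pipeline if arrival != day]

        # Serve demand from available stock; the remainder is lost.
        shipped = min(on_hand, demand)
        on_hand -= shipped
        served += shipped
        total += demand

        # Review the inventory position and reorder if needed.
        position = on_hand + sum(qty for _, qty in pipeline)
        if position <= reorder_point:
            pipeline.append((day + lead_time_days, order_qty))

        history.append(on_hand)

    fill_rate = served / total if total else 1.0
    return fill_rate, history
```

Running the same demand series with different reorder points or lead times gives a quick comparison of service level against average stock, which is the kind of output a stock analysis dashboard could then display.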

In conclusion, modern data technologies and data science have a lot to offer supply chain teams by producing high value-added use cases that support adaptability.

The enterprise data platform is a vision that operational teams and data/IT teams should aspire to, provided they have the means to pursue it.

Published originally in French for ADD12 magazine